How to Deploy a Single-Master Kubernetes Cluster with Ansible

Kubernetes is one of the most popular open-source, enterprise-ready container orchestration systems. It is used to automate the deployment, scaling, and management of containerized applications. Installing Kubernetes manually is a laborious and error-prone process, but it can be dramatically simplified with configuration management tools such as Ansible. This article demonstrates how to deploy a fully functional Kubernetes cluster with Ansible using our installation package.

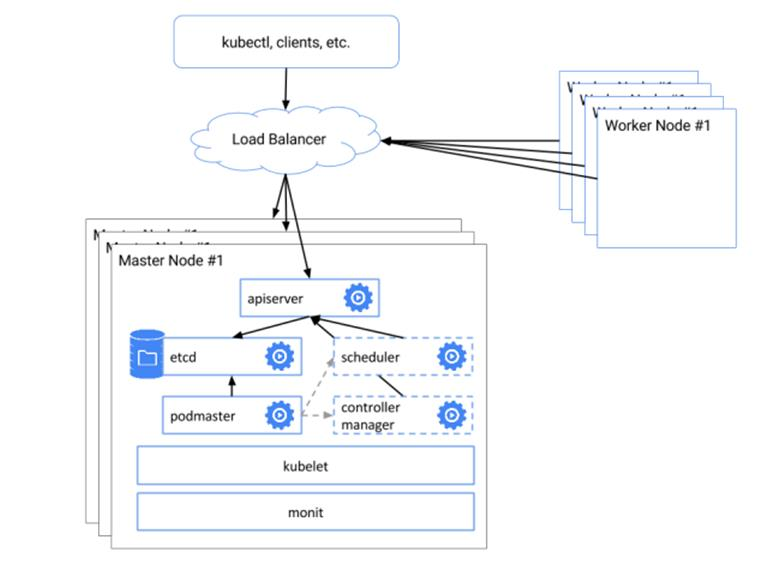

Cluster Design

Our Kubernetes cluster consists of three servers: one works as the Kubernetes master and the other two are worker nodes. All servers are in the same internal network, 192.168.100.0/24. The cluster depends on the following software components: Kubernetes, etcd, Docker, Calico or Flannel (the network plugin is selectable), Helm and nginx-ingress-controller.

Prerequisites

The servers have the following requirements:

- Each server, bare-metal or virtual, has at least 2 CPU/vCPU cores, 4GB RAM and 10GB disk space, with Ubuntu 16.04 LTS or CentOS/RHEL 7 installed.

- All servers are on the same network and can reach one another.

- The Ansible server can be set up on any server in the same network, with Ansible v2.4 (or later) and python-netaddr installed.

- Internet access is available for all servers to download software binaries.

- Root user remote login has to be enabled on all servers except the Ansible Server.
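Before continuing, you may want to confirm that each server meets the minimums above. Below is a minimal Bash sketch that is not part of our installation package: the function names are hypothetical, it assumes GNU coreutils (nproc, df -BG), and it treats 3800 MB as "4GB RAM" because a 4GB server usually reports a little less than 4096 MB.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check for the server prerequisites (not part of the installation package).

MIN_CPUS=2
MIN_MEM_MB=3800   # assumption: a "4GB" server usually reports slightly less than 4096 MB
MIN_DISK_GB=10

# meets_minimum CPUS MEM_MB DISK_GB
# Returns 0 when every value reaches its minimum, non-zero otherwise.
meets_minimum() {
  local cpus=$1 mem_mb=$2 disk_gb=$3
  [ "$cpus" -ge "$MIN_CPUS" ] &&
    [ "$mem_mb" -ge "$MIN_MEM_MB" ] &&
    [ "$disk_gb" -ge "$MIN_DISK_GB" ]
}

# check_this_server
# Reads the local CPU count, total memory and free space on / (GNU coreutils assumed),
# prints them, and returns non-zero if any minimum is not met.
check_this_server() {
  local cpus mem_mb disk_gb
  cpus=$(nproc)
  mem_mb=$(awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo)
  disk_gb=$(df -P -BG / | awk 'NR == 2 { sub(/G$/, "", $4); print $4 }')
  echo "CPUs: $cpus, memory: ${mem_mb} MB, free disk on /: ${disk_gb} GB"
  if meets_minimum "$cpus" "$mem_mb" "$disk_gb"; then
    echo "OK: minimum requirements met"
  else
    echo "WARNING: below the minimum requirements" >&2
    return 1
  fi
}
```

Run check_this_server on each server; a non-zero exit status means at least one value is below the minimum.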

Architecture

The following is our server inventory and architecture design.

| Server Name | IP Address | Roles |
|---|---|---|
| Master01 | 192.168.100.10 | Master & Ansible Server |
| Node01 | 192.168.100.20 | Worker node |
| Node02 | 192.168.100.30 | Worker node |
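The roles in this table correspond to the [kube-master] and [kube-node] groups of the Ansible inventory edited in step 5 below. As an illustration only, the following hypothetical render_inventory function builds those two groups from lines of the form "name ip role"; the input format is our assumption, and the real inventory also needs its [all:vars] section.

```shell
#!/usr/bin/env bash
# Hypothetical helper: render the [kube-master] and [kube-node] inventory groups
# from stdin lines of the form "<name> <ip> <role>", where <role> is "master" or "node".

render_inventory() {
  awk '
    $3 == "master" { masters = masters $2 " node_name=" tolower($1) "\n" }
    $3 == "node"   { nodes   = nodes   $2 " node_name=" tolower($1) "\n" }
    END { printf "[kube-master]\n%s\n[kube-node]\n%s", masters, nodes }
  '
}
```

Feeding it the three rows of the table (Master01 as master, Node01 and Node02 as node) produces the same host lines as the inventory shown in step 5.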

Kubernetes Cluster Installation

Preparing All Servers

For Ubuntu 16.04:

1. Install Python 2.7

$ sudo apt update

$ sudo apt install python2.7 -y

$ sudo ln -s /usr/bin/python2.7 /usr/bin/python

2. Enable remote root login

$ sudo passwd root

$ sudo sed -i 's/prohibit-password/yes/' /etc/ssh/sshd_config

$ sudo service ssh restart
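The sed command above only changes an uncommented PermitRootLogin prohibit-password line, which is the Ubuntu 16.04 default. If your image ships a different or commented-out value, a slightly more general sketch like the following may help. The function name is our own; it edits whatever file you pass in, so you can try it on a copy first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: force "PermitRootLogin yes" in an sshd_config-style file.
# Usage: enable_root_login /etc/ssh/sshd_config   (GNU sed assumed for -i -E)

enable_root_login() {
  local conf=$1
  if grep -Eq '^#?PermitRootLogin' "$conf"; then
    # Rewrite every existing, possibly commented-out, PermitRootLogin line.
    sed -i -E 's/^#?PermitRootLogin.*/PermitRootLogin yes/' "$conf"
  else
    # No directive present at all: append one.
    echo 'PermitRootLogin yes' >> "$conf"
  fi
}
```

After editing the real file, sudo /usr/sbin/sshd -t checks the configuration for errors before you restart the SSH service.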

For CentOS 7:

Install Python 2.7

# yum install epel-release -y

# yum update -y

# yum install python -y
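If you prepare many servers, the per-distribution steps above can be folded into one script that decides between the apt and yum commands by reading /etc/os-release. A minimal sketch, assuming only the Ubuntu and CentOS/RHEL IDs covered by this article; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pick the package manager for the distributions this guide supports.
# Usage: pkg_manager_for [/etc/os-release]

pkg_manager_for() {
  local release_file=${1:-/etc/os-release}
  local id
  # os-release lines look like ID=ubuntu or ID="centos"; strip the optional quotes.
  id=$(awk -F= '$1 == "ID" { gsub(/"/, "", $2); print $2 }' "$release_file")
  case "$id" in
    ubuntu) echo "apt" ;;
    centos|rhel) echo "yum" ;;
    *) echo "unsupported distribution: $id" >&2; return 1 ;;
  esac
}
```

On a server, its output tells you whether to run the Ubuntu or the CentOS commands above.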

Setting Up Kubernetes Cluster

All of the following steps are performed on the master server.

1. Set up the Ansible server

The master server also acts as the Ansible server.

For Ubuntu 16.04 (run these commands as root or with sudo):

$ apt-get install git python-pip -y

$ pip install pip --upgrade

$ pip install --no-cache-dir ansible

You might encounter the following error:

Traceback (most recent call last):
  File "/usr/bin/pip", line 9, in <module>
    from pip import main
ImportError: cannot import name main

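This happens because pip 10 and later no longer export main from the top-level pip package, while the /usr/bin/pip wrapper installed by the distribution still imports it. A quick fix is to edit /usr/bin/pip and find the following section: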

from pip import main
if __name__ == '__main__':
    sys.exit(main())

and replace it with:

from pip import __main__
if __name__ == '__main__':
    sys.exit(__main__._main())

Then re-run the command:

$ pip install --no-cache-dir ansible
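The manual edit above can also be scripted. The following sketch applies the same two substitutions to the file you pass in and keeps a .bak copy of the original; the function name is our own, and it assumes the wrapper contains exactly the lines shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply the /usr/bin/pip fix described above.
# Usage: patch_pip_wrapper /usr/bin/pip   (leaves the original in /usr/bin/pip.bak)

patch_pip_wrapper() {
  local wrapper=$1
  sed -i.bak \
    -e 's/^from pip import main$/from pip import __main__/' \
    -e 's/sys\.exit(main())/sys.exit(__main__._main())/' \
    "$wrapper"
}
```

Alternatively, invoking pip as python -m pip bypasses the /usr/bin/pip wrapper entirely.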

For CentOS 7:

# yum install git python-pip -y

# pip install pip --upgrade

# pip install --no-cache-dir ansible
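The prerequisites call for Ansible 2.4 or later plus python-netaddr, while the pip commands above install only Ansible. A minimal check for either distribution follows; version_ge is a hypothetical helper built on GNU sort -V, and the pip install netaddr hint is our suggestion rather than a step from the installation package.

```shell
#!/usr/bin/env bash
# Hypothetical check that the Ansible server meets the prerequisites.

# version_ge A B -> 0 if version A >= version B (GNU sort -V ordering)
version_ge() {
  [ "$(printf '%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

check_ansible_server() {
  local v
  # The first line of "ansible --version" is e.g. "ansible 2.4.3.0"
  # (newer releases print "ansible [core 2.x.y]"); keep only the version number.
  v=$(ansible --version | head -n 1 | grep -Eo '[0-9]+(\.[0-9]+)+' | head -n 1)
  if version_ge "$v" 2.4; then
    echo "Ansible $v: OK"
  else
    echo "Ansible $v is older than 2.4" >&2
    return 1
  fi
  # Assumption: Ansible runs under the default "python" interpreter.
  python -c 'import netaddr' 2>/dev/null ||
    { echo "netaddr missing: try pip install netaddr" >&2; return 1; }
}
```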

2. Enable passwordless SSH

Generate an SSH key pair on the master node:

# ssh-keygen -t rsa -b 2048

Copy the public key from the master node to every node in the cluster, including the master itself:

# ssh-copy-id 192.168.100.10

# ssh-copy-id 192.168.100.20

# ssh-copy-id 192.168.100.30
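With more nodes, the key generation and copy steps above are easier to run in a loop. A sketch, assuming you work as root and use the three IP addresses from the architecture table; the function names and the COPY_ID_CMD override (handy for a dry run with echo) are hypothetical, and the empty passphrase is our choice for unattended use.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: generate a key once, copy it to every node, then verify key login.

NODES="192.168.100.10 192.168.100.20 192.168.100.30"
COPY_ID_CMD=${COPY_ID_CMD:-ssh-copy-id}   # set COPY_ID_CMD=echo for a dry run

ensure_key() {
  mkdir -p -m 700 "$HOME/.ssh"
  # Create a 2048-bit RSA key with an empty passphrase only if none exists yet.
  [ -f "$HOME/.ssh/id_rsa" ] || ssh-keygen -t rsa -b 2048 -N "" -f "$HOME/.ssh/id_rsa"
}

distribute_key() {
  local host
  for host in "$@"; do
    "$COPY_ID_CMD" "root@$host" || return 1
  done
}

verify_key_login() {
  local host
  for host in "$@"; do
    # BatchMode makes ssh fail instead of prompting when key-based login does not work.
    ssh -o BatchMode=yes "root@$host" true || { echo "key login failed: $host" >&2; return 1; }
  done
}
```

Usage: ensure_key && distribute_key $NODES && verify_key_login $NODES (NODES is left unquoted on purpose so it splits into separate hosts).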

3. Download the installation package:

single-master-k8s-ansible.tar.gz

4. Unpack it into the /etc directory:

# tar -zxvf single-master-k8s-ansible.tar.gz -C /etc/
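To confirm the archive unpacked where the later steps expect it, you can check for the inventory file and the playbook used in steps 5 and 6. A small sketch; verify_package is a hypothetical name. If you want to inspect the archive before extracting it, tar -tzf single-master-k8s-ansible.tar.gz lists its contents without unpacking.

```shell
#!/usr/bin/env bash
# Hypothetical check that the installation package was unpacked correctly.
# Usage: verify_package /etc/ansible

verify_package() {
  local base_dir=${1:-/etc/ansible}
  local missing=0 f
  # hosts and deploy.yml are the two files the later steps rely on.
  for f in hosts deploy.yml; do
    if [ ! -f "$base_dir/$f" ]; then
      echo "missing: $base_dir/$f" >&2
      missing=1
    fi
  done
  return "$missing"
}
```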

5. Modify the Ansible inventory file /etc/ansible/hosts to fit your environment. Usually you need to replace the servers' IP addresses and interface names. If you prefer to use Calico as your network plugin, change the value of network_plugin to calico:

# Kubernetes nodes

[kube-master]

192.168.100.10 node_name=master01

[kube-node]

192.168.100.20 node_name=node01

192.168.100.30 node_name=node02

[all:vars]

# Choose network plugin (calico or flannel)

network_plugin="flannel"

# Set up pod network

pod_network="10.244.0.0/16"

# The Exec Files Directory On Kubernetes Nodes

bin_dir="/usr/local/bin"

# Ansible Working Directory

base_dir="/etc/ansible"

# Docker Version

docker_version="v17.03.2-ce"

# Kubernetes version

k8s_version_ubuntu="1.10.0-00"

k8s_version_centos="1.10.0-0"

# Helm Version

helm_version="v2.9.1"

# Kubernetes apps yaml dir

yaml_dir="/root/.kube/kube-apps"

# Kubernetes cert directory

kube_cert_dir="/etc/kubernetes/pki"

# Kubernetes config directory

kube_config_dir="/etc/kubernetes"
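Before deploying, it is worth checking that network_plugin holds one of the two supported values. A minimal sketch that reads key="value" lines like those in [all:vars] above; inventory_var and check_inventory are hypothetical helpers. Running ansible-inventory --graph is also a quick way to confirm that the host groups parse as intended.

```shell
#!/usr/bin/env bash
# Hypothetical checks for the inventory file edited in step 5.

# inventory_var FILE KEY -> prints the value of KEY="value" with the quotes removed
inventory_var() {
  awk -F= -v key="$2" '$1 == key { gsub(/"/, "", $2); print $2; exit }' "$1"
}

check_inventory() {
  local file=${1:-/etc/ansible/hosts}
  local plugin
  plugin=$(inventory_var "$file" network_plugin)
  case "$plugin" in
    calico|flannel) echo "network_plugin=$plugin" ;;
    *) echo "network_plugin must be calico or flannel, got '$plugin'" >&2; return 1 ;;
  esac
}
```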

6. Set up the Kubernetes cluster:

# cd /etc/ansible

# ansible-playbook deploy.yml
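A cautious way to run this step is to confirm that Ansible can reach every host and that the playbook parses before starting the actual deployment. The sketch below chains ansible all -m ping, ansible-playbook --syntax-check and the real run, stopping at the first failure; the wrapper name and the overridable command variables are our own.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around step 6: ping all hosts, syntax-check, then deploy.

ANSIBLE=${ANSIBLE:-ansible}
ANSIBLE_PLAYBOOK=${ANSIBLE_PLAYBOOK:-ansible-playbook}

# Runs in a subshell so the cd does not change the caller's directory.
run_deploy() (
  cd "${1:-/etc/ansible}" || exit 1
  # 1. Every inventory host must answer the ping module.
  "$ANSIBLE" all -m ping || exit 1
  # 2. The playbook must parse.
  "$ANSIBLE_PLAYBOOK" --syntax-check deploy.yml || exit 1
  # 3. Deploy the cluster.
  "$ANSIBLE_PLAYBOOK" deploy.yml
)
```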

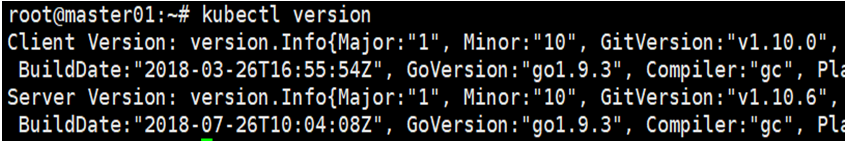

Verify Installation Results

Check the Kubernetes version:

# kubectl version

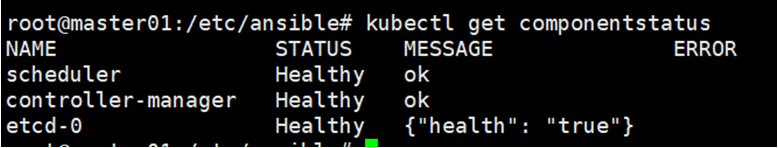

Check the status of the Kubernetes components:

# kubectl get componentstatus

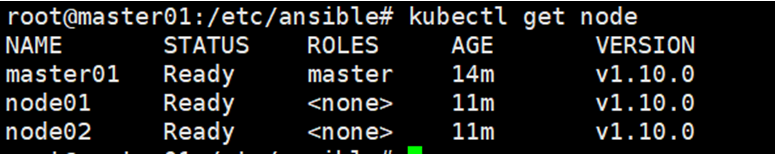

Check the worker node status

# kubectl get node

Check the status of all the pods

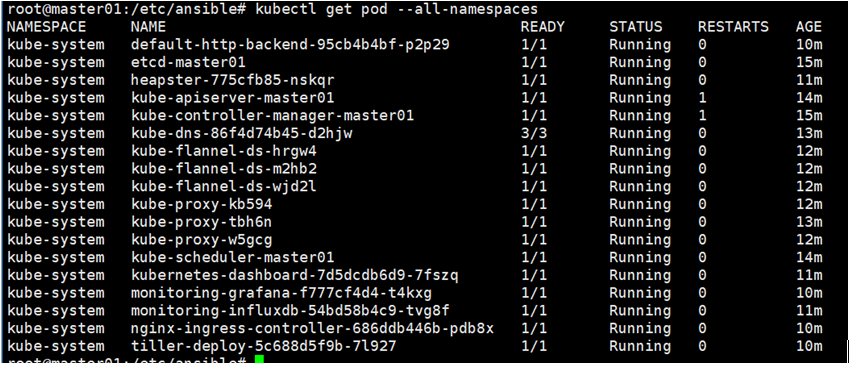

# kubectl get pod --all-namespaces

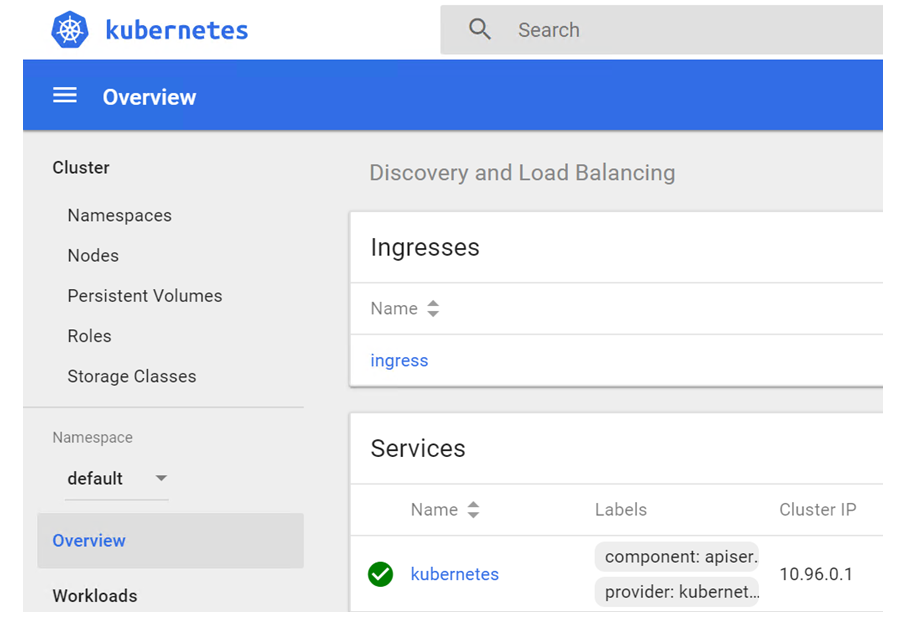
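Rather than reading these tables by eye, you can filter them for anything that is not yet healthy. A minimal sketch, assuming the default column layout of kubectl get node --no-headers (STATUS in column 2) and kubectl get pod --all-namespaces --no-headers (STATUS in column 4); the function names are hypothetical, and they only look at the STATUS column, not the READY counts.

```shell
#!/usr/bin/env bash
# Hypothetical filters for the verification step; both read kubectl output on stdin.

# Prints the names of nodes whose STATUS is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Prints "namespace/name status" for pods that are neither Running nor Completed.
unhealthy_pods() {
  awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2 " " $4 }'
}
```

Usage: kubectl get node --no-headers | not_ready_nodes and kubectl get pod --all-namespaces --no-headers | unhealthy_pods; empty output from both means every node is Ready and every pod is Running or Completed.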

Verifying the Kubernetes Dashboard

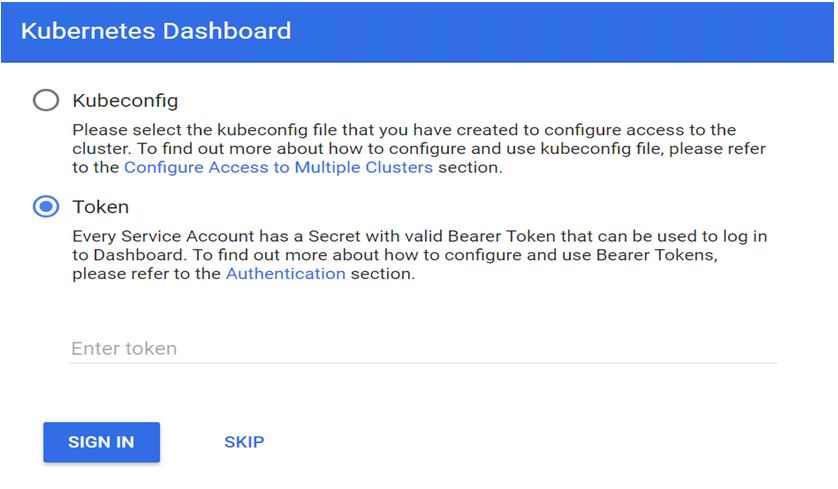

Open https://192.168.100.10:6443/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy in your browser and select the Token option.

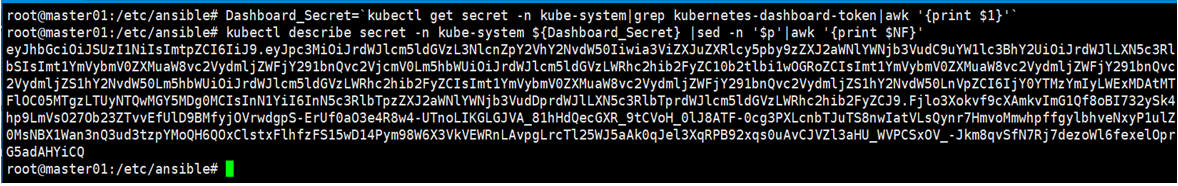

The Dashboard token can be retrieved by running the following commands:

# Dashboard_Secret=`kubectl get secret -n kube-system|grep kubernetes-dashboard-token|awk '{print $1}'`

# kubectl describe secret -n kube-system ${Dashboard_Secret} |sed -n '$p'|awk '{print $NF}'
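The sed -n '$p' in the command above assumes the token is printed on the last line of the kubectl describe output. Matching the token: field explicitly is a little more robust; the sketch below does that, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the bearer token from "kubectl describe secret" output on stdin.
token_from_describe() {
  awk '$1 == "token:" { print $2 }'
}

# Example (requires cluster access):
#   secret=$(kubectl get secret -n kube-system | awk '/^kubernetes-dashboard-token/ { print $1 }')
#   kubectl describe secret -n kube-system "$secret" | token_from_describe
```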

Congratulations!

You are done. You now have a fully functional Kubernetes cluster to test out. You can explore our other solutions if you want to learn more. Thank you!